Take a seat and grab some popcorn, because the recent turmoil within OpenAI has all the makings of a blockbuster movie. With rumors of mass walkouts, Sam Altman’s return, and potential mergers, it’s been quite the roller coaster ride for this artificial intelligence powerhouse.

The Staff Revolt: A Showdown Like No Other

Only two days ago, an astounding revelation came to light – over 700 of OpenAI’s grand total of 775 employees threatened to depart unless the board stepped down. It’s not every day we see the AI community’s version of a standoff, where the weapons of choice are coding prowess and neural network knowledge.

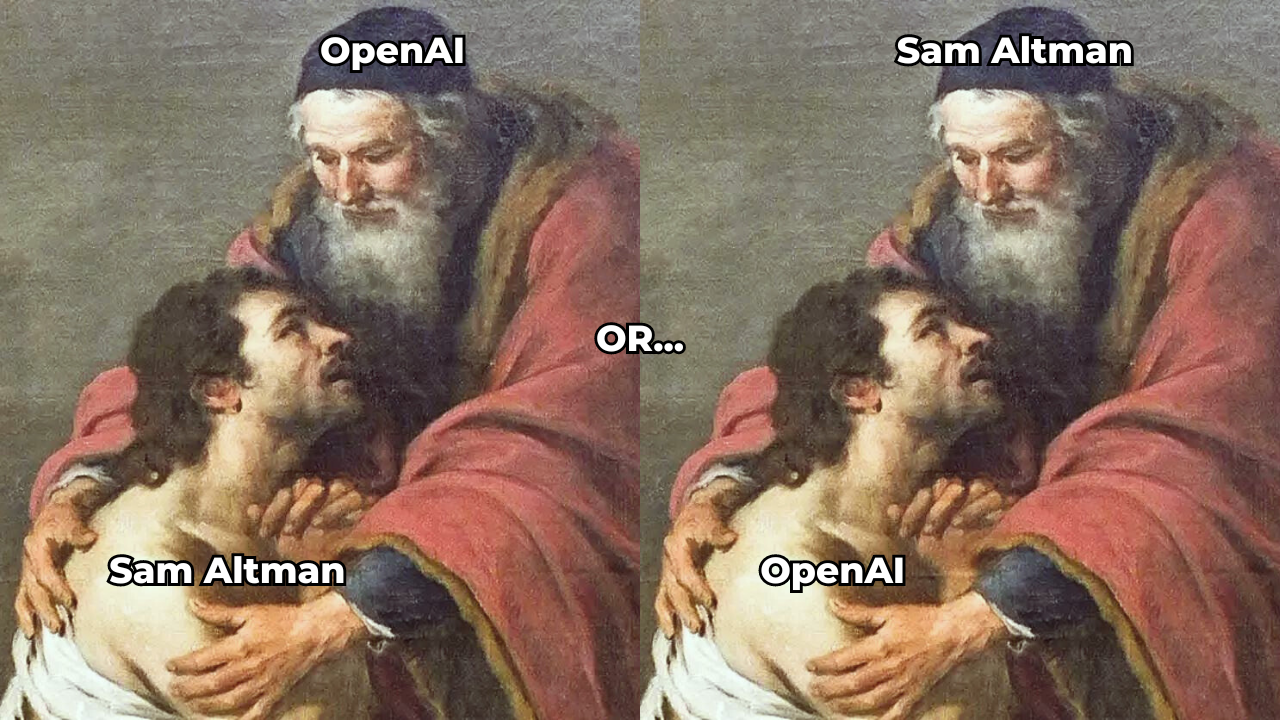

The Return of the Prodigal? Not Quite a Parallel Story, but Still a Twist Worth Watching

In an unexpected turn, OpenAI confirmed the return of Sam Altman as CEO on Tuesday, November 21st. This isn’t your typical prodigal son story though. Altman didn’t return out of remorse or necessity; instead, it was the staff at OpenAI who championed his comeback. Even Satya Nadella, CEO of Microsoft, a major investor in OpenAI, seemed open to his return. Rather than a tale of repentance and forgiveness, what we have here is a compelling account of leadership, power dynamics, and workforce influence making waves in Silicon Valley.

The Unexpected Alliance: A Whispered Merger

In a surprising turn of events, there are rumors of a proposed merger between OpenAI and its competitor, Anthropic. This potential fusion could send shockwaves throughout the AI industry. Meanwhile, hints suggest that some of OpenAI’s clients might be considering switching loyalties, further intensifying this unfolding narrative.

As if these developments weren’t thrilling enough, there are reports from Reuters that OpenAI’s investors are considering legal action against the board. It’s as if we’re watching a chess match, with each piece representing a different stakeholder in this intricate game.

The Recruitment Feeding Frenzy: Competitors Circling

Competing organizations, including those led by influential figures like Elon Musk and Marc Benioff, are reportedly viewing this turmoil as an opportunity to recruit OpenAI’s potentially departing staff. It’s a veritable feeding frenzy in the tech world, with the sharks sensing blood in the water.

The Great Divide

It’s no secret that the tech and AI world is currently embroiled in a fierce debate, one that has effectively divided the community into two distinct camps. This division, as reported by outlets like TechCrunch and The Economist, isn’t about who has the superior AI model or the most efficient algorithms. No, this debate is much more fundamental and far-reaching. It’s about the future trajectory of AI itself.

On one side of the divide, we have the ‘Doomer’ camp. This group believes in the philosophy of effective altruism. Prominent tech figures, such as Vinod Khosla and Balaji Srinivasan, belong to this faction. They argue that AI development should be slowed down. Their reasoning? They believe that unchecked AI growth could lead to unintended negative consequences. For these tech luminaries, it’s not just about technological advancement; it’s about ensuring this advancement doesn’t compromise our ethical standards or societal well-being.

Then there’s the other camp – the ‘Bloomers.’ This group firmly believes in maintaining the current speed of AI growth. They argue that slowing down AI development would stifle innovation and prevent society from reaping the extensive benefits of AI. This camp is not dismissive of the potential risks posed by AI; rather, they believe these risks can be managed without putting the brakes on AI development.

Bridging the Divide

This schism in the AI world has led to intense debates, high-profile drama, and even threats of mass walkouts. And it’s not just the tech giants who are taking a stand. A CNN report showed 42% of CEOs believe AI could pose a threat to humanity in the next 5-10 years. This statistic underscores the gravity of the situation and the urgent need for a balanced and thoughtful approach to AI development.

To bridge the schism in the AI world, it’s essential to foster a collaborative environment where both ‘Doomers’ and ‘Bloomers’ can engage in constructive dialogue. Creating a platform for open communication, shared research, and transparent policy-making can help reconcile these divergent views. Incorporating ethical considerations and risk assessments into AI development, led by a diverse panel of experts from various fields, can ensure a more balanced approach. This collaborative effort could pave the way for responsible innovation, where technological advancement and societal welfare are not seen as mutually exclusive but as complementary objectives.

So, where does this leave us? The tech and AI world is at a crossroads, with each camp passionately advocating for its perspective. One thing is clear: the decisions we make today will shape the future of AI. Now, isn’t that a thought worth pondering?

The Future of OpenAI: Predictions and Possibilities

With all these plot twists and turns, predicting the future of OpenAI is as challenging as deciphering an encrypted code. As the company, its employees, and clients face another day of uncertainty, one thing is clear – the ride isn’t over yet. So, strap in, folks; it’s going to be an exhilarating journey!

If you’re looking to be a part of a community that stays on top of updates like these, join our weekly Mastermind, AI Unplugged. Here, discussion transforms into action, and action catapults you into the league of AI-empowered success stories.